Memory Partitions and NUMA Configuration Options

- Using ZST to Configure the System to use Zing Memory Partitions for the Java Heap

- Running Zing Using Zing Memory Partitions for the Java Heap

- Zing Memory Partitions Default Command Configuration

- Actively Configuring Zing Memory Partitions

- Configuring ZST Partitions and Running Zing instances on Specific Nodes

- Setting up Performance Testing

- NUMA Viewing and Commands

This document describes how to configure and use Azul Zing Builds of OpenJDK (Zing) memory partitions on a non-uniform memory access (NUMA) server. A Zing memory partition is constructed using system memory from a single node or set of nodes. A Zing memory partition provides a JVM instance with the memory used for the JVM’s Java heap.

Zing Memory Partitions for the Java Heap Overview

Azul Zing System Tools (ZST) supports deploying Java applications that need the performance and lower latency gained by using only a subset of the nodes (processor sockets and their associated memory) on a NUMA server. The Zing Memory Partitions feature lets you create one or more Zing Memory Partitions, any one of which can supply the system memory for a Zing instance's Java heap. Combined with the CPU and non-Java heap memory placement controlled by Linux command-line options, this lets you configure your entire Java process to use processing and memory resources local to a single processor socket or set of processor sockets. A Zing Memory Partition may span multiple nodes.

A NUMA architecture server consists of two or more processor sockets, each directly connected to its own physical memory. Each socket is interconnected with one or more of the other sockets. NUMA support must be enabled in both the server BIOS and the operating system (OS) for Zing Memory Partitions to work.

In a multi-socket system, the memory connected directly to a processor socket is referred to as local node memory. From that same socket, memory connected to a different processor socket is referred to as remote node memory. A core accessing memory on a remote node sees higher latency and lower throughput than when it accesses memory on its local node.

Using ZST to Configure the System to use Zing Memory Partitions for the Java Heap

Zing Memory Partitions are configured with respect to your server's nodes. You select the nodes that a Zing Memory Partition draws its memory from by specifying a nodemask when you configure the partition with the ZST commands. You can also subdivide the memory in a single node so that it can be shared by multiple Zing Memory Partitions. For examples, see pmem.conf.example.

Running Zing Using Zing Memory Partitions for the Java Heap

Use the -XX:AzMemPartition=partition-number command-line option to make Zing take the memory for the Java heap from Zing Memory Partition partition-number. To associate all of the process's computational resources with a specific node or set of nodes, you still need Linux command-line options (for example, numactl) to select the CPUs to run on and the source of the non-Java heap memory.

Zing Memory Partitions Default Command Configuration

Zing memory partitions are configured with the command:

system-config-zing-memory

Accepting the defaults for the command configures the system as follows:

-

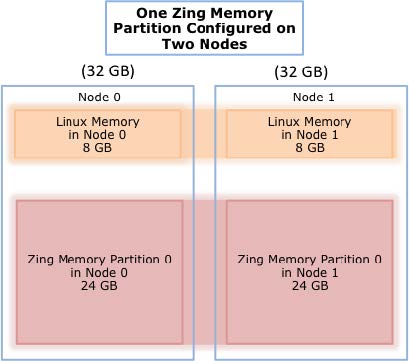

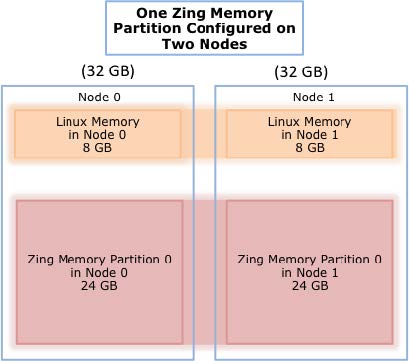

Creates a single Zing Memory Partition across all nodes. By default, this is Zing Memory Partition 0, as depicted as Zing Memory Partition 0 shown in the One Zing Memory Partition Configured on Two Nodes figure below.

-

On start-up of Zing, without use of the

-XX:AzMemPartitioncommand line option, a Zing instance’s Java heap will use memory from Zing Memory Partition 0 which, with this configuration includes memory from all nodes.

Because Zing's Java heap uses memory from all of the nodes, the overall memory access times and data throughput will be roughly an average of what you would obtain by accessing only local or only remote node memory.

With its default settings, the ZST Zing memory configuration command allocates 75% of the available system memory (all of the RAM on the machine) to the default Zing Memory Partition.

Example: A single Zing Memory Partition allocated across two nodes.

64 GB of system memory in two 32 GB nodes: node 0 (32 GB) connected to socket 0 and node 1 (32 GB) connected to socket 1.

Command:

Run the system-config-zing-memory command and accept all defaults.

Result:

Zing Memory Partition 0 is allocated across Node 0 and Node 1 as illustrated below.


Actively Configuring Zing Memory Partitions

Configuring Two Zing Memory Partitions

When configuring partitions, you are required to have a Zing Memory Partition named Zing Memory Partition 0.

In some cases, consider creating Zing Memory Partition 0 as a small partition for running Java tools. Allocating 2 GB lets tools such as jstat and jmap run with Zing's default Java heap size of 1 GB.

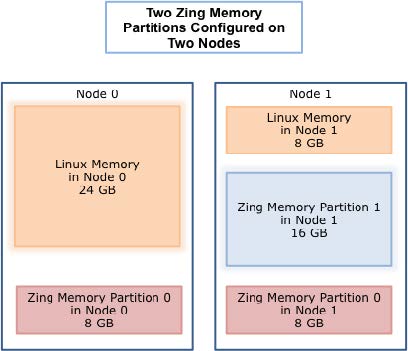

Example: Two Zing Memory Partitions, one on a single node and one across two nodes.

64 GB of system memory in two 32 GB nodes: node 0 (32 GB) connected to socket 0 and node 1 (32 GB) connected to socket 1.

Zing Memory Partitions: two Zing Memory Partitions, each with 16 GB in total:

- One Zing Memory Partition uses memory from each of the two nodes.

- One Zing Memory Partition uses memory from only one node.

This corresponds to the following two partitions, depicted in the figure below:

- Zing Memory Partition 0: 8 GB from node 0 and 8 GB from node 1

- Zing Memory Partition 1: 16 GB from node 1 (the local node for the cores in socket 1)

Node numbers and Zing Memory Partition numbers are assigned independently. Node numbering is defined by the Linux OS, while you assign Zing Memory Partition numbers when you run the system-config-zing-memory command. So, in the example above, Zing Memory Partition 1 could instead be defined to use memory exclusively from node 0. Likewise, on a four-socket server, Zing Memory Partition 2 could be set up to use memory exclusively from node 3 if that gave the best performance when the application was benchmarked.

Setting up Zing Memory Partition 0

To reconfigure a system that has already been configured using the default configuration, first reconfigure Zing Memory Partition 0. Run the system-config-zing-memory command and make the following choices.

# system-config-zing-memory --add-partition 0

Welcome to the Zing memory configuration wizard by Azul Systems.

This wizard sets up the Zing memory configuration file

/etc/zing/pmem.conf.0

and initializes System Zing memory. Read the man pages for zing, zing-zst,

and zing-pmem-conf for more information about this configuration file.)

Use of System Zing Memory by Java processes running on the Zing requires

you to configure memory in advance. This is analogous to reservation of memory

for Linux hugepages, although System Zing Memory pages are not interchangeable

with hugepages.

For most environments running Java applications on the Zing, Azul recommends

configuring your system with 25% System Linux Memory and 75% System Zing Memory.

Choose yes to accept this default, or no to enter the wizard expert flow.

** accept default configuration

** (y)es or (n)o [default 'y']: n

---------------------------------------------------------------------------

[ Current task: Sizing Zing memory / Choosing sizing method ]

Zing requires you to partition memory up front for exclusive use by

Java. (This is analogous to Linux hugepages, although Zing pages

are not interchangeable with hugepages.)

Would you like to decide how much memory to allocate to Zing based

on the total size of your system, or based on the total size of the

Java instances you expect to run?

** Enter (s)ystem or (j)ava heap size [default 's']: s

---------------------------------------------------------------------------

[ Current task: Sizing Zing memory / Choosing system sizing method ]

Do you want to allocate a percentage of your total system memory to

Zing, or would you prefer to specify an exact amount?

** Enter (p)ercentage or (e)xact [default 'p']: p

** Enter percentage of total memory to dedicate to Zing [default '75']: 30

---------------------------------------------------------------------------

[ Current task: Choose memory reservation policy ]

You can reserve all of the requested System Zing Memory now using the

reserve-at-config policy or you can reserve the System Zing Memory for

each Zing process at process launch using the reserve-at-launch policy.

If you choose reserve-at-launch then the amount of memory reserved in the

previous step will be the upper limit available for all Zing's to use.

Which reservation policy do you want to use?

** Enter reserve-at-(c)onfig or reserve-at-(l)aunch [default 'c']: c

---------------------------------------------------------------------------

[ Current task: Sizing Zing memory / Sizing Contingency memory ]

Part of Zing memory is set aside in a common contingency memory pool for all Azul

Java processes to share. The contingency memory pool exists as an insurance

policy to allow a JVM instance to temporarily avoid OOM behavior and

grow beyond -Xmx.

What percentage of Zing memory would you like to dedicate to the

contingency memory pool?

** Enter percentage of Zing memory to be used for contingency memory [default '5']:

---------------------------------------------------------------------------

[ Current task: Saving configuration and initializing Zing memory ]

Info: Zing Memory reserved using reserve-at-config policy.

Info: azulPmemPages: 21802.

INFO: az_pmem_reserve_pages (num2mPages 21802) succeeded

INFO: az_pmem_fund_transfer (to 7, from 0, bytes 45722107904) succeeded

INFO: az_pmem_fund_transfer (to 1, from 7, bytes 2285895680) succeeded

INFO: az_pmem_fund_transfer (to 3, from 7, bytes 2285895680) succeeded

INFO: az_pmem_fund_transfer (to 0, from 7, bytes 41150316544) succeeded

Info: You can now run Java processes up to -Xmx39244m or -Xmx38g.

Info: Azul pmem initialized successfully.

The default Nodemask ‘ALL’ in the example above causes the ZST to create Zing Memory Partition 0 using memory from both node 0 and node 1. Specifying 0x1 as the Nodemask would cause the ZST to create Zing Memory Partition 0 using system memory from only node 0.
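The figures in the Saving configuration step above are consistent with each other, and their arithmetic can be reproduced in a few lines. The following Python sketch is reconstructed from the log only, not from ZST's implementation. It assumes 2 MiB pages (as the num2mPages label suggests), that the two equal transfers to funds 1 and 3 are each the contingency percentage (5% by default) of the reserved pages rounded down to whole pages, and that what remains in fund 0 is the -Xmx limit the wizard reports. pmem_accounting is a hypothetical name.

```python
PAGE_BYTES = 2 * 1024 * 1024  # assumption: Zing memory is reserved in 2 MiB pages


def pmem_accounting(num_2m_pages: int, contingency_pct: int = 5) -> dict:
    """Reproduce the byte counts and -Xmx hint printed for one partition.

    Assumes the two equal side transfers (to funds 1 and 3 in the log) are each
    contingency_pct percent of the reserved pages, rounded down to whole pages,
    and that the remaining pages are what Java heaps can use.
    """
    side_pages = num_2m_pages * contingency_pct // 100
    heap_pages = num_2m_pages - 2 * side_pages
    heap_mb = heap_pages * 2  # each page is 2 MiB
    return {
        "reserved_bytes": num_2m_pages * PAGE_BYTES,     # "to 7, from 0"
        "side_transfer_bytes": side_pages * PAGE_BYTES,  # "to 1" and "to 3"
        "heap_bytes": heap_pages * PAGE_BYTES,           # "to 0, from 7"
        "xmx_m": f"-Xmx{heap_mb}m",
        "xmx_g": f"-Xmx{heap_mb // 1024}g",  # whole GiB, rounded down
    }


if __name__ == "__main__":
    for key, value in pmem_accounting(21802).items():
        print(f"{key}: {value}")
```

With 21802 pages this gives -Xmx39244m and -Xmx38g, matching the wizard. The same arithmetic also matches the partition 0 and partition 1 figures (1136 and 56888 pages) in the service restart output later in this document.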

Setting up Zing Memory Partition 1

To continue the example, configure the second Zing Memory Partition, Zing Memory Partition 1, by running the system-config-zing-memory command again and making the following choices.

# system-config-zing-memory --add-partition 1

Welcome to the Zing memory configuration wizard by Azul Systems.

This wizard sets up the Zing memory configuration file

/etc/zing/pmem.conf.1

and initializes System Zing memory. Read the man pages for zing, zing-zst,

and zing-pmem-conf for more information about this configuration file.)

Use of System Zing Memory by Java processes running on the Zing requires

you to configure memory in advance. This is analogous to reservation of memory

for Linux hugepages, although System Zing Memory pages are not interchangeable

with hugepages.

For most environments running Java applications on the Zing, Azul recommends

configuring your system with 25% System Linux Memory and 75% System Zing Memory.

Choose yes to accept this default, or no to enter the wizard expert flow.

** accept default configuration

** (y)es or (n)o [default 'y']: n

---------------------------------------------------------------------------

[ Current task: Sizing Zing memory / Choosing sizing method ]

Zing requires you to partition memory up front for exclusive use by

Java. (This is analogous to Linux hugepages, although Zing pages

are not interchangeable with hugepages.)

Would you like to decide how much memory to allocate to Zing based

on the total size of your system, or based on the total size of the

Java instances you expect to run?

** Enter (s)ystem or (j)ava heap size [default 's']: s

---------------------------------------------------------------------------

[ Current task: Sizing Zing memory / Choosing system sizing method ]

Do you want to allocate a percentage of your total system memory to

Zing, or would you prefer to specify an exact amount?

** Enter (p)ercentage or (e)xact [default 'p']: p

** Enter percentage of total memory to dedicate to Zing [default '75']: 30

---------------------------------------------------------------------------

[ Current task: Choose memory reservation policy ]

You can reserve all of the requested System Zing Memory now using the

reserve-at-config policy or you can reserve the System Zing Memory for

each Zing process at process launch using the reserve-at-launch policy.

If you choose reserve-at-launch then the amount of memory reserved in the

previous step will be the upper limit available for all Zing's to use.

Which reservation policy do you want to use?

** Enter reserve-at-(c)onfig or reserve-at-(l)aunch [default 'c']: c

---------------------------------------------------------------------------

[ Current task: Sizing Zing memory / Sizing Contingency memory ]

Part of Zing memory is set aside in a common contingency memory pool for all Azul

Java processes to share. The contingency memory pool exists as an insurance

policy to allow a JVM instance to temporarily avoid OOM behavior and

grow beyond -Xmx.

What percentage of Zing memory would you like to dedicate to the

contingency memory pool?

** Enter percentage of Zing memory to be used for contingency memory [default '5']:

---------------------------------------------------------------------------

[ Current task: Configuring Zing memory / Configuring MemoryUseNodemask ]

You can configure Zing to use memory from a specific node (socket) or set of

nodes (sockets) on the system.

Enter the number in hexadecimal format.

For Example, 0x1 == node 0, 0x2 == node 1, 0x3 == node 0 and 1.

On which nodes do you want to reserve Zing memory ?

** Enter MemoryUseNodemask [default 'ALL']:

---------------------------------------------------------------------------

[ Current task: Saving configuration and initializing Zing memory ]

Info: Zing Memory reserved using reserve-at-config policy.

Info: azulPmemPages: 21802.

INFO: az_pmem_reserve_pages (num2mPages 21802) succeeded

INFO: az_pmem_fund_transfer (to 7, from 0, bytes 45722107904) succeeded

INFO: az_pmem_fund_transfer (to 1, from 7, bytes 2285895680) succeeded

INFO: az_pmem_fund_transfer (to 3, from 7, bytes 2285895680) succeeded

INFO: az_pmem_fund_transfer (to 0, from 7, bytes 41150316544) succeeded

Info: You can now run Java processes up to -Xmx39244m or -Xmx38g on partition 1.

Info: Azul pmem initialized successfully.

If you do not specify a Nodemask to select the nodes you want to use, the Zing Memory Partition uses the default value 'ALL' and takes memory from all of the nodes.

Setting Nodemask for a Zing Memory Partition

The Nodemask is a hexadecimal value that corresponds to a binary value used as a bit mask to select a corresponding node or set of nodes to use as the source of the memory for the Zing Memory Partition. The default Nodemask is ALL. The default directs the ZST to create the Zing Memory Partition to use memory from all nodes.

For example, on a four-socket server, system-config-zing-memory interprets the default Nodemask ALL as hexadecimal 0xF (binary 1111) and creates the Zing Memory Partition with one quarter of the requested memory coming from each of the four nodes, 0 to 3.

On an eight-node server, ALL is interpreted as hexadecimal 0xFF (binary 11111111).

The following table shows how hexadecimal Nodemask values map to binary values, and how those binary values map to the corresponding set of nodes.

| Nodemask (hexadecimal) | Binary | Nodes selected by the Nodemask |
|---|---|---|
| 0x1 | 1 | Node 0 only |
| 0x2 | 10 | Node 1 only |
| 0x3 | 11 | Nodes 0 and 1 |
| 0x9 | 1001 | Nodes 0 and 3 |
| 0xC | 1100 | Nodes 2 and 3 |
| 0xE | 1110 | Nodes 1, 2, and 3 |
| 0xF | 1111 | Nodes 0, 1, 2, and 3 |
| 0xFF | 11111111 | Nodes 0 through 7 |
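Because each bit of the Nodemask selects one node, you can compute a Nodemask from a list of node numbers, or expand one back into nodes, with a few bit operations. This Python sketch uses hypothetical helper names; at the MemoryUseNodemask prompt you still type the resulting hexadecimal value yourself (or accept ALL).

```python
def nodes_to_nodemask(nodes):
    """Return the hexadecimal Nodemask that selects the given node numbers."""
    mask = 0
    for node in nodes:
        mask |= 1 << node  # bit n set means node n is selected
    return f"0x{mask:X}"


def all_nodes_mask(node_count):
    """Return the Nodemask that ALL corresponds to on a server with node_count nodes."""
    return f"0x{(1 << node_count) - 1:X}"


def nodemask_to_nodes(nodemask, node_count):
    """Expand a Nodemask such as '0xE' or 'ALL' into a sorted list of node numbers."""
    if nodemask.upper() == "ALL":
        return list(range(node_count))
    mask = int(nodemask, 16)  # int() accepts an optional 0x prefix in base 16
    return [n for n in range(node_count) if mask & (1 << n)]


if __name__ == "__main__":
    print(nodes_to_nodemask([0, 3]))    # 0x9
    print(nodemask_to_nodes("0xE", 4))  # [1, 2, 3]
    print(all_nodes_mask(8))            # 0xFF
```

The results agree with the table: nodes 0 and 3 give 0x9, 0xE selects nodes 1, 2, and 3, and ALL on an eight-node server is 0xFF.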

Viewing stdout After Configuring and Restarting the Zing Memory Service

When you finish configuring Zing Memory Partitions, restart the Zing memory service. The following is the report after configuring Zing Memory Partitions 0 and 1.

Zing Memory Partition 0 has 2 GB and Zing Memory Partition 1 has 100 GB available for Java heaps.

# service zing-memory restart

zing-memory: INFO: Restarting...

zing-memory: INFO: Stopping...

zing-memory: INFO: stop successful

zing-memory: INFO: Starting...

Info: azulPmemPages: 1136.

INFO: az_pmem_reserve_pages (num2mPages 1136) succeeded

INFO: az_pmem_fund_transfer (to 7, from 0, bytes 2382364672) succeeded

INFO: az_pmem_fund_transfer (to 1, from 7, bytes 117440512) succeeded

INFO: az_pmem_fund_transfer (to 3, from 7, bytes 117440512) succeeded

INFO: az_pmem_fund_transfer (to 0, from 7, bytes 2147483648) succeeded

Info: You can now run Java processes up to -Xmx2048m or -Xmx2g on partition 0.

Info: azulPmemPages: 56888.

INFO: az_pmem_reserve_pages (num2mPages 56888) succeeded

INFO: az_pmem_fund_transfer (to 7, from 0, bytes 119302782976) succeeded

INFO: az_pmem_fund_transfer (to 1, from 7, bytes 5964300288) succeeded

INFO: az_pmem_fund_transfer (to 3, from 7, bytes 5964300288) succeeded

INFO: az_pmem_fund_transfer (to 0, from 7, bytes 107374182400) succeeded

Info: You can now run Java processes up to -Xmx102400m or -Xmx100g on partition 1.

Info: Azul pmem initialized successfully.

zing-memory: INFO: start successful

zing-memory: INFO: restart successful

Running Zing JVMs using Zing Memory Partitions

Zing does not yet support the -XX:+UseNUMA option, and adding it can even reduce performance. See the Zing Virtual Machine Release Notes for details.

-XX:AzMemPartition Specifies Which Zing Memory Partition to Use for the Java Heap

Use the Zing Java command-line option -XX:AzMemPartition to tell the Zing JVM which Zing Memory Partition to use as the source of memory for the Java heap.

- -XX:AzMemPartition=<num>, where <num> is the number of a Zing Memory Partition that was configured using the ZST memory configuration commands.

If the option is not specified, Zing uses Zing Memory Partition 0, which, in the default configuration, uses memory from all nodes.

A Zing Memory Partition uses memory from specific nodes. For that memory to be local node memory, you need to start Zing so that it runs on the cores of those nodes; one way to do this is with the Linux numactl command. If your Zing Memory Partition spans more than one node, start Zing so that it uses the cores on all of the nodes that supply the partition's memory.

numactl Specifies Processor Socket and Node for the Zing Process

If you specify that a process should use Zing memory from a specific node or set of nodes, it makes sense to also run it on the cores of those same nodes so that the memory it uses is local. Using local node memory for both Zing memory and Linux memory lowers memory access overhead and improves application performance. One way to do this is to use the numactl command with the --cpunodebind and --membind command-line arguments.

Zing runs on Linux as a process. To run Zing as a process on the cores on processor socket 1 (node 1 in the numactl nomenclature) and to use the Linux memory only from node 1:

$ numactl --cpunodebind=1 --membind=1 java -XX:AzMemPartition=1 {java-command-line-options}

This numactl command uses:

- --cpunodebind=1: To use only the cores in node 1.

- --membind=1: To allocate the process's Linux (non-Java heap) memory only from node 1.

The numactl command runs java with this Zing command-line option:

- -XX:AzMemPartition=1: To take the Java heap memory from Zing Memory Partition 1, which was previously configured to use memory from node 1.

Assuming that the machine is configured as in Configuring Two Zing Memory Partitions, if the -XX:AzMemPartition Java command line option is not specified then Zing’s Java Heap memory will come from both node 0 and node 1 because Zing hasn’t been instructed to create the Java Heap using memory only from node 1.

Example: Run on Zing Memory Partition 0 (Default Configuration)

Zing Memory Partition 0, when using the default configuration, uses memory from all of the nodes, so we do not need to use the operating system numactl command or the -XX:AzMemPartition command line option to specify that the JVM should use memory from Zing Memory Partition 0:

java {java-command-line-options}

Note that this is equivalent to:

numactl --cpunodebind=0,1 --membind=0,1 java -XX:AzMemPartition=0 {java-command-line-options}

Example: Run on Zing Memory Partition 1 using numactl

Assume that you have created a Zing Memory Partition named 1 that has been configured to only use memory from node 1. In this case, Zing Memory Partition 1 needs to be specified by using the -XX:AzMemPartition=1 command line option; and because Zing Memory Partition 1 uses the memory in node 1 then we need to use the numactl Linux command in order for Zing’s process to run on cores in node 1 so that the Java Heap memory will be local memory. Likewise, we also want to use the numactl Linux command to use local memory in node 1 for Zing’s Linux memory. To do both of these, we specify that we should use the cores in node 1 with the option --cpunodebind=1 and specify that the memory in node 1 should be used for Linux memory with the option --membind=1:

numactl --cpunodebind=1 --membind=1 java -XX:AzMemPartition=1 {java-command-line-options}
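If you launch instances like this from scripts, generating the command in one place keeps the CPU binding, the Linux memory binding, and the Zing Memory Partition from drifting apart. The following Python sketch builds the argument list; numa_java_command is a hypothetical helper, and it assumes the partition was already configured to use memory from the same nodes (it cannot check pmem.conf.N).

```python
import shlex


def numa_java_command(nodes, partition, java_args):
    """Build a numactl + java command that keeps CPUs, Linux memory, and the
    Java heap on the same nodes.

    `partition` must already be configured (with system-config-zing-memory)
    to use memory from `nodes`; this helper does not verify that.
    """
    node_list = ",".join(str(n) for n in sorted(nodes))
    return [
        "numactl",
        f"--cpunodebind={node_list}",       # run only on the cores of these nodes
        f"--membind={node_list}",           # Linux (non-Java heap) memory from these nodes
        "java",
        f"-XX:AzMemPartition={partition}",  # Java heap from this Zing Memory Partition
        *java_args,
    ]


if __name__ == "__main__":
    # -Xmx40g -jar app.jar stands in for your own Java options
    print(shlex.join(numa_java_command([1], 1, ["-Xmx40g", "-jar", "app.jar"])))
```

The list can be passed directly to subprocess.run(cmd, check=True) to start the JVM, which avoids shell quoting issues.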

Checking the Java Application After Startup

Always check your Zing application after startup to ensure that it is behaving as you expected:

- Use top to confirm that the application is running on the cores you expect. To view the individual cores in use and the executing threads, press these keys in the window running top: z 1 H

- Use zing-ps -s to confirm that it is using the Zing Memory Partition you expect.

- Run numactl --hardware to view the free memory for the node that the Zing Memory Partition is using; the free memory for that node should have dropped by the amount of memory the Zing Memory Partitions use on that node.

Configuring ZST Partitions and Running Zing instances on Specific Nodes

Viewing the Partition pmem.conf File

Every Zing Memory Partition has a pmem.conf.N file, where N is the Zing Memory Partition number. The files are in the /etc/zing directory. Do not manually edit these files.

- The default Zing Memory Partition is Partition 0, and the pmem.conf file is a symlink to the pmem.conf.0 file.

- The nodemask value that specifies the node or nodes used when configuring the Zing Memory Partition is in the partition's pmem.conf.N file.

When you configured the Partitions, you had the option to specify a hexadecimal number to select the nodes to use for each Partition. See Setting Nodemask for a Zing Memory Partition.

Managing Partitions Using the system-config-zing-memory Command

The system-config-zing-memory command options that apply to Zing Memory Partitions are listed below.

Usage:

system-config-zing-memory [OPTIONS]

| Option | Description and example output (N is a partition number from 0 to 15) |
|---|---|
| --add-partition N | Adds Zing Memory Partition N. |
| --stop-partition N | Stops Zing Memory Partition N. Stop all running applications on the partition before stopping the partition. Example: # system-config-zing-memory --stop-partition 1 prints: INFO: az_pmem_unreserve_pages (num2mPages 18151) succeeded Info: Partition 1 successfully stopped. |
| --start-partition N | Starts Zing Memory Partition N. Example output for partition 1: Info: azulPmemPages: 18151. INFO: az_pmem_reserve_pages (num2mPages 18151) succeeded INFO: az_pmem_fund_transfer (to 7, from 0, bytes 38065405952) succeeded INFO: az_pmem_fund_transfer (to 1, from 7, bytes 1902116864) succeeded INFO: az_pmem_fund_transfer (to 3, from 7, bytes 1902116864) succeeded INFO: az_pmem_fund_transfer (to 0, from 7, bytes 34261172224) succeeded Info: You can now run Java processes up to -Xmx32674m or -Xmx31g on partition 1. Info: Azul pmem initialized successfully. Info: Partition 1 successfully started. |
| --restart-partition N | Restarts Zing Memory Partition N. Example output for partition 1: Info: Partition 1 successfully stopped. Info: azulPmemPages: 18151. INFO: az_pmem_reserve_pages (num2mPages 18151) succeeded INFO: az_pmem_fund_transfer (to 7, from 0, bytes 38065405952) succeeded INFO: az_pmem_fund_transfer (to 1, from 7, bytes 1902116864) succeeded INFO: az_pmem_fund_transfer (to 3, from 7, bytes 1902116864) succeeded INFO: az_pmem_fund_transfer (to 0, from 7, bytes 34261172224) succeeded Info: You can now run Java processes up to -Xmx32674m or -Xmx31g on partition 1. Info: Azul pmem initialized successfully. Info: Partition 1 successfully started. Info: Partition 1 successfully restarted. |
| --delete-partition N | Deletes Zing Memory Partition N. Example output for partition 1: Info: Partition 1 successfully deleted. |

Viewing Partition Status Using the zing-ps Command

The zing-ps command options that apply to Zing Memory Partitions are listed below:

- -p <pid>: Prints information about the process with the given PID.

- -s: Prints the memory summary.

- -partition P: Prints memory accounting information for Zing Memory Partition P, where P is a partition number from 0 to 15.

Setting up Performance Testing

This section describes an example performance testing scenario with a desired testing environment and how to configure Zing memory partitions within the testing environment. To run the system-config-zing-memory command you need root access permission on the server.

Performance Testing Environment

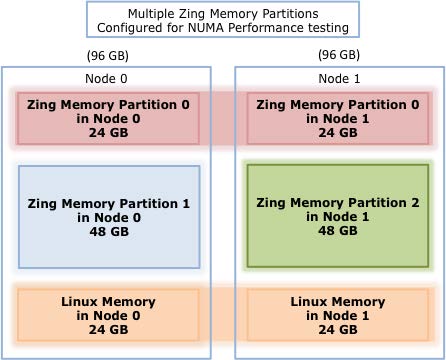

In this example, set up a system with three Zing Memory Partitions so that you can compare the benchmark results for running Zing when the memory for the Java Heap comes from node 0, node 1, or from both nodes. The scenario assumes that you will want to switch between the different memory configurations, trying different combinations of nodes, without needing to reconfigure the machine.

Configuring the Zing Memory Partitions

To accomplish this, create three different partitions on a system with 192 GB of system memory (RAM). Overall, the machine will use 144 GB for Zing Memory (75% of system memory) and 48 GB for Linux Memory (25% of system memory). To make the various configurations easier to compare, create three equally sized Zing Memory Partitions of 48 GB each.
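The sizing in this scenario is plain arithmetic, reproduced by the Python sketch below. plan_partitions is a hypothetical helper; system-config-zing-memory does its own sizing and appears to work in 2 MB pages, so the amounts it actually reserves can differ slightly from these round numbers.

```python
def plan_partitions(system_gb, zing_pct, partition_count):
    """Split system memory into Zing and Linux memory, then into equal partitions.

    Planning arithmetic only; it does not model ZST's page rounding or the
    contingency memory set aside inside each partition.
    """
    zing_gb = system_gb * zing_pct / 100
    linux_gb = system_gb - zing_gb
    per_partition_gb = zing_gb / partition_count
    return zing_gb, linux_gb, per_partition_gb


if __name__ == "__main__":
    zing, linux, each = plan_partitions(192, 75, 3)
    print(f"Zing: {zing:g} GB, Linux: {linux:g} GB, per partition: {each:g} GB")
```

For this scenario it prints Zing: 144 GB, Linux: 48 GB, per partition: 48 GB.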

Test the following three configurations, which differ in where Zing's Java heap memory comes from:

| Environment | Partition name | Memory used for the Java heap |
|---|---|---|
| 1 | Zing Memory Partition 0 | Node 0 and node 1 |
| 2 | Zing Memory Partition 1 | Node 0 exclusively |
| 3 | Zing Memory Partition 2 | Node 1 exclusively |

The illustration below depicts three Zing Memory Partitions configured on a server with 192GB of system memory:

Running using the Configured Zing Memory Partition

Following a reboot of your system, so that you can start each run with a known configuration, you can run Zing using the memory from Zing Memory Partition 0 for the Java Heap and using the cores on processor sockets 0 and 1 and the Linux memory on nodes 0 and 1 with the following command:

$ java -Xmx40g {java-command-line-options}

This is equivalent to the command:

$ numactl --cpunodebind=0,1 --membind=0,1 java -XX:AzMemPartition=0 -Xmx40g {java-command-line-options}

To run Zing using the memory from Zing Memory Partition 1 for the Java Heap and using the cores on node 0 and the Linux memory on node 0 use the following command:

$ numactl --cpunodebind=0 --membind=0 java -XX:AzMemPartition=1 -Xmx40g {java-command-line-options}

To run Zing using the memory from Zing Memory Partition 2 for the Java Heap and using the cores on node 1 and the Linux memory on node 1 use the following command:

$ numactl --cpunodebind=1 --membind=1 java -XX:AzMemPartition=2 -Xmx40g {java-command-line-options}

NUMA Viewing and Commands

Viewing Node and CPU Numbers

To view the node and CPU numbers seen by the operating system:

# cd /sys/devices/system/node

# ls

node0 node1

# ls node1

cpu1 cpu13 cpu15 cpu3 cpu5 cpu7 cpu9 cpu11 cpumap distance meminfo numastat
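The same listing can be collected for every node with a short Python sketch. cpus_by_node is a hypothetical helper; it reads only the nodeN directory names and their cpuN entries, and the root parameter lets you point it at a copy of the directory tree for testing.

```python
import os
import re


def cpus_by_node(root="/sys/devices/system/node"):
    """Map each NUMA node number to the sorted CPU numbers listed under it."""
    result = {}
    for entry in os.listdir(root):
        node_match = re.fullmatch(r"node(\d+)", entry)
        if not node_match:
            continue  # skip non-node entries such as 'online' or 'possible'
        cpus = []
        for name in os.listdir(os.path.join(root, entry)):
            cpu_match = re.fullmatch(r"cpu(\d+)", name)  # skips cpumap and cpulist
            if cpu_match:
                cpus.append(int(cpu_match.group(1)))
        result[int(node_match.group(1))] = sorted(cpus)
    return result


if __name__ == "__main__":
    if os.path.isdir("/sys/devices/system/node"):
        for node, cpus in sorted(cpus_by_node().items()):
            print(f"node {node}: {' '.join(map(str, cpus))}")
```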

Using the numactl Hardware Command

Depending on operating system, kernel, and BIOS settings, the numactl command can provide the node and CPU numbers.

Example: A Dell box with Hyper-Threading Disabled

[root@system ~]# numactl --hardware

available: 2 nodes (0-1)

node 0 cpus: 0 2 4 6 8 10 12 14

node 0 size: 65490 MB

node 0 free: 63290 MB

node 1 cpus: 1 3 5 7 9 11 13 15

node 1 size: 65536 MB

node 1 free: 63289 MB

node distances:

node 0 1

0: 10 20

1: 20 10
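To compare free memory per node before and after starting Zing Memory Partitions (as suggested in Checking the Java Application After Startup), you can parse this output. The following Python sketch handles the node lines shown above; parse_numactl_hardware is a hypothetical helper, and because some systems omit the cpus lines (as in the later examples), that key is simply absent when the line is missing.

```python
import re
import shutil
import subprocess


def parse_numactl_hardware(text):
    """Parse numactl --hardware text into {node: {"cpus", "size_mb", "free_mb"}}."""
    nodes = {}
    for line in text.splitlines():
        m = re.search(r"node (\d+) (cpus|size|free):\s*(.*)", line)
        if not m:
            continue  # skips 'available:', the distance table, and other lines
        node, field, value = int(m.group(1)), m.group(2), m.group(3).strip()
        info = nodes.setdefault(node, {})
        if field == "cpus":
            info["cpus"] = [int(cpu) for cpu in value.split()]
        else:
            info[f"{field}_mb"] = int(value.split()[0])  # sizes are printed in MB
    return nodes


if __name__ == "__main__":
    if shutil.which("numactl"):
        out = subprocess.run(["numactl", "--hardware"], capture_output=True,
                             text=True, check=True).stdout
        for node, info in sorted(parse_numactl_hardware(out).items()):
            print(node, info)
```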

Example: An HP DL380 G8


dl380> numactl --hardware

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7

node 0 size: 8157 MB

node 0 free: 7326 MB

node 1 cpus: 8 9 10 11 12 13 14 15

node 1 size: 8191 MB

node 1 free: 7746 MB

node distances:

node 0 1

0: 10 20

1: 20 10

Example: A two-socket Dell PowerEdge R710 with NUMA Disabled

The server uses E5645 processors (24 CPUs) and has Node Interleave enabled in the BIOS.

Dell PowerEdge R710/00NH4P, BIOS 6.2.3 04/26/2012

numactl --hardware

available: 1 nodes (0)

node 0 size: 48446 MB

node 0 free: 11800 MB

node distances:

node 0

0: 10

Note: This output shows only a single node even though the server has two sockets. Node Interleave is enabled in this server's BIOS, so no NUMA topology is presented to the kernel.

Example: A two-socket X5660 server, numactl output without node CPU details

numactl --hardware

available: 2 nodes (0-1)

node 0 size: 18157 MB

node 0 free: 16253 MB

node 1 size: 18180 MB

node 1 free: 17039 MB

node distances:

node 0 1

0: 10 20

1: 20 10

More Detailed View of CPUs Using lscpu Command

# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 40

On-line CPU(s) list: 0-39

Thread(s) per core: 1

Core(s) per socket: 10

CPU socket(s): 4

NUMA node(s): 4

. . . . . . . .

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 30720K

NUMA node0 CPU(s): 0,4,8,12,16,20,24,28,32,36

NUMA node1 CPU(s): 2,6,10,14,18,22,26,30,34,38

NUMA node2 CPU(s): 1,5,9,13,17,21,25,29,33,37

NUMA node3 CPU(s): 3,7,11,15,19,23,27,31,35,39
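The NUMA nodeN CPU(s) values above use the Linux CPU-list notation: comma-separated CPU numbers and ranges such as 0-39 (as on the On-line CPU(s) list line). The following Python sketch expands that notation per node, for example to check which cores numactl --cpunodebind will use on each node. parse_cpulist and lscpu_numa_cpus are hypothetical helper names, and only plain numbers and ranges are handled.

```python
import re


def parse_cpulist(cpulist):
    """Expand a CPU list such as '0-3,8,10-11' into [0, 1, 2, 3, 8, 10, 11]."""
    cpus = []
    for part in cpulist.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))  # ranges are inclusive
        else:
            cpus.append(int(part))
    return cpus


def lscpu_numa_cpus(text):
    """Return {node: [cpus]} from the 'NUMA nodeN CPU(s):' lines of lscpu output."""
    result = {}
    for line in text.splitlines():
        m = re.match(r"\s*NUMA node(\d+) CPU\(s\):\s*(\S+)", line)
        if m:
            result[int(m.group(1))] = parse_cpulist(m.group(2))
    return result


if __name__ == "__main__":
    sample = "NUMA node0 CPU(s): 0,4,8,12\nNUMA node1 CPU(s): 2,6,10,14"
    print(lscpu_numa_cpus(sample))
```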

Determining the Location of Task’s Memory Allocation

To determine a task’s memory allocation location, examine the /proc/<pid>/numa_maps file; this displays each memory object for a particular task.

The following example shows the entries for the current shell’s heap and stack:

[root@tux ~]# grep -e heap -e stack /proc/$$/numa_maps

0245f000 default heap anon=65 dirty=65 active=60 N1=65

7fff23318000 default stack anon=7 dirty=7 N1=7

- The first field is the start address of the virtual memory area (VMA), for example 0245f000 or 7fff23318000.

- The second field is the memory allocation policy (for example, default means the system default policy).

- The third field is the path to the mapped file or the kind of memory segment (in this example, heap or stack).

- anon= and dirty= show the number of anonymous and dirty pages.

- N<node>= shows the number of pages allocated from each <node>.
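To see at a glance which nodes a process's memory comes from, you can total the N<node>= fields across all of its mappings. This Python sketch (hypothetical helper names) does that for a numa_maps file. The totals are page counts, and page sizes can differ between mappings, so they are not directly comparable to bytes.

```python
import os
import re


def pages_per_node(numa_maps_line):
    """Return {node: pages} from the N<node>=<pages> fields of one numa_maps line."""
    return {int(node): int(pages)
            for node, pages in re.findall(r"\bN(\d+)=(\d+)", numa_maps_line)}


def summarize_numa_maps(text):
    """Total the pages each node supplies across all mappings in a numa_maps file."""
    totals = {}
    for line in text.splitlines():
        for node, pages in pages_per_node(line).items():
            totals[node] = totals.get(node, 0) + pages
    return totals


if __name__ == "__main__":
    path = f"/proc/{os.getpid()}/numa_maps"  # this Python process's own mappings
    if os.path.exists(path):
        with open(path) as f:
            print(summarize_numa_maps(f.read()))
```

For the shell example above, the heap and stack lines total 72 pages, all on node 1. To inspect another process, such as a running Zing JVM, use its PID in the path; reading another user's numa_maps may require root.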

Show NUMA Policy Settings of the Current Process

The numactl --show command displays the current memory allocation policy and preferred node. It reports the settings of the process it runs in, so run it from the process (for example, the shell) whose settings you want to display. You can also use it to confirm the valid CPU and node numbers for other numactl options such as --physcpubind, --cpubind, and --membind.

For example, the following commands bind a shell, its child processes, and their associated memory objects to node 1, and numactl --show then displays the policy settings:

[root@tux ~]# numactl --membind=1 --cpunodebind=1 bash

[root@tux ~]# numactl --show

policy: bind

preferred node: 1

physcpubind: 1 3 5 7 9 11 13 15 17 19 21 23 25 27 29 31

cpubind: 1

nodebind: 1

membind: 1